Training Generative Flow Networks to select optimal neural network hyperparameters

By Beck LaBash and Sebastian Sepulveda

Final project for CS4180: Reinforcement Learning

Machine learning practitioners know the struggle all too well: you've designed your neural network architecture, prepared your data, and now you're staring at a blank configuration file wondering, "What hyperparameters should I use?" Should the learning rate be 0.01 or 0.001? Will a batch size of 32 or 64 work better? How many epochs should I train for?

Traditionally, answering these questions involves either educated guesswork based on experience or computationally expensive grid searches. Either way, it's inefficient and often frustrating. That's why my colleague Sebastian Sepulveda and I decided to explore a more elegant solution using Generative Flow Networks (GFNs).

The Hyperparameter Challenge

Let's frame the problem formally: given a machine learning model, we want to find the hyperparameter configuration that maximizes its performance.

Sounds straightforward, but there's a catch: the number of possible configurations grows exponentially with the number of hyperparameters. Grid search quickly becomes impractical, and gradient-based approaches often get trapped in local minima.
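To make the growth concrete, here is a small sketch that counts the configurations in a full grid. The candidate values and the extra `hidden_units` hyperparameter are illustrative assumptions, not the grid we actually searched.

```python
from itertools import product

# Hypothetical candidate values, chosen only for illustration.
search_space = {
    "learning_rate": [0.1, 0.01, 0.001, 0.0001],
    "batch_size": [16, 32, 64, 128],
    "epochs": [5, 10, 20, 40],
}

def grid_size(space):
    """Number of configurations a full grid search would have to train."""
    size = 1
    for values in space.values():
        size *= len(values)
    return size

def enumerate_grid(space):
    """List every configuration in the grid as a dict of name -> value."""
    names = list(space)
    return [dict(zip(names, combo)) for combo in product(*space.values())]

grid = enumerate_grid(search_space)
print(grid_size(search_space))  # 64

# Adding one more hyperparameter with four choices multiplies the cost by four.
search_space["hidden_units"] = [64, 128, 256, 512]
print(grid_size(search_space))  # 256
```

With k choices per hyperparameter and n hyperparameters, the grid holds k^n configurations, each of which needs a full training run.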

Moreover, recent research by Liao et al. (2022) suggests that multiple hyperparameter configurations can yield similar accuracy while differing significantly in other metrics like training time or inference latency. This means we don't just need the single best configuration—we need a diverse set of high-performing options.

Enter Generative Flow Networks

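Generative Flow Networks offer an intriguing solution. Unlike traditional reinforcement learning methods, which maximize expected reward and therefore tend to converge on a single best solution, GFNs learn to sample complete objects with probability proportional to their reward. This property makes them well suited to our hyperparameter tuning problem, where we want many good configurations rather than just one.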

At a high level, GFNs construct a flow network where:

- States represent partial hyperparameter configurations

- Actions add a specific hyperparameter value

- Terminal states are complete configurations

- Rewards are based on model performance

The network learns a flow function

From this flow function, we derive a policy that samples hyperparameter configurations with probability proportional to their performance.

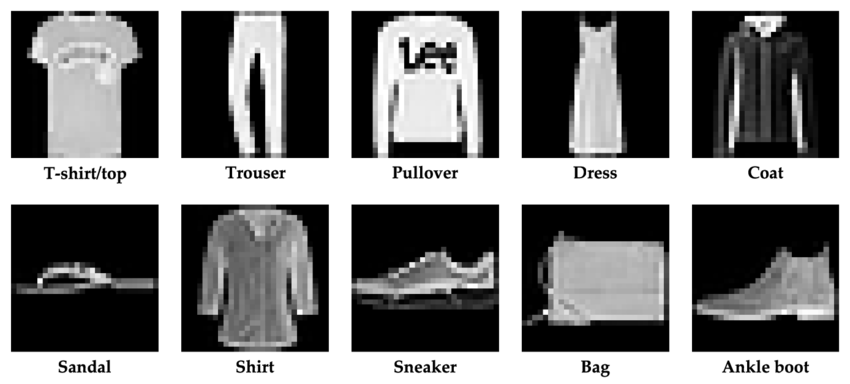
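The link between flow and policy can be sketched on a toy problem small enough to enumerate. The example below assumes hyperparameters are chosen in a fixed order, so the state space is a tree, and it uses a made-up reward; a real GFN approximates the flow with a neural network rather than computing it exactly as done here.

```python
# Hypothetical choices and ordering; not the values used in our experiment.
CHOICES = [
    ("learning_rate", [0.1, 0.01, 0.001]),
    ("batch_size", [32, 64]),
    ("epochs", [5, 10]),
]

def reward(config):
    """Toy positive reward standing in for model performance."""
    lr, batch_size, epochs = config
    bonus_lr = 2.0 if lr == 0.01 else 0.0
    bonus_bs = 1.0 if batch_size == 64 else 0.0
    return 1.0 + bonus_lr + bonus_bs + epochs / 10.0

def flow(state):
    """Flow through a partial configuration (tuple of values chosen so far).

    A terminal state carries its reward; any other state carries the sum
    of its children's flows, so inflow equals outflow everywhere.
    """
    depth = len(state)
    if depth == len(CHOICES):
        return reward(state)
    return sum(flow(state + (v,)) for v in CHOICES[depth][1])

def policy(state):
    """Forward policy: P(child | state) = F(child) / F(state)."""
    depth = len(state)
    total = flow(state)
    return {v: flow(state + (v,)) / total for v in CHOICES[depth][1]}

def terminal_probability(config):
    """Probability that the policy builds `config` starting from the empty state."""
    prob = 1.0
    for depth in range(len(config)):
        prob *= policy(config[:depth])[config[depth]]
    return prob

Z = flow(())  # total flow, equal to the sum of all terminal rewards
best = (0.01, 64, 10)
print(terminal_probability(best), reward(best) / Z)  # both 5/35, about 0.143
```

Because the per-step ratios telescope, the probability of reaching any complete configuration equals its reward divided by the total flow, which is the sampling property described above.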

Our Experiment: Fashion-MNIST

To test our approach, we applied it to the task of tuning hyperparameters for a feed-forward neural network on the Fashion-MNIST dataset.

We focused on three critical hyperparameters:

- Learning rate

- Batch size

- Number of training epochs

A naive implementation would require training thousands of neural networks—a computationally prohibitive approach. Instead, we created a surrogate model: we conducted an initial grid search using Ray Tune, then trained a deep neural network to predict test accuracy based on hyperparameter values. This surrogate achieved a validation loss of just 0.0006, allowing us to rapidly estimate the performance of any configuration.

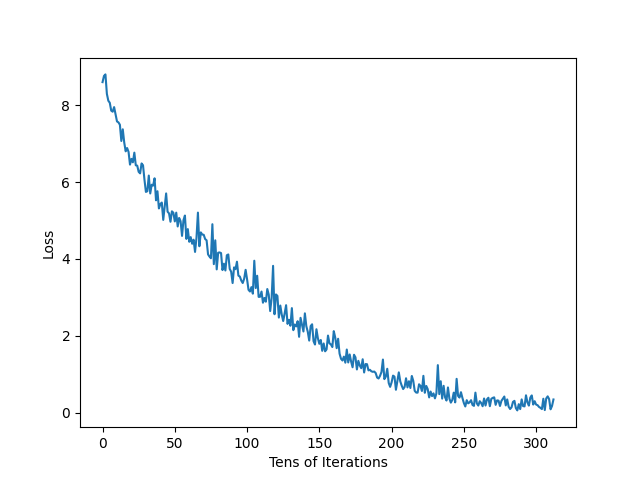
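The surrogate idea can be illustrated without a neural network. The sketch below swaps in inverse-distance-weighted interpolation for our deep network, and the accuracy values are made-up placeholders rather than our Ray Tune results; the function names are hypothetical as well.

```python
import math

# Made-up (learning_rate, batch_size, epochs) -> accuracy pairs.
# These are placeholders, not measurements from the actual grid search.
grid_results = [
    ((0.1, 32, 5), 0.70),
    ((0.01, 32, 10), 0.86),
    ((0.001, 64, 10), 0.88),
    ((0.0001, 64, 20), 0.80),
]

def featurize(config):
    """Log-scale each hyperparameter so distances are comparable across axes."""
    lr, batch_size, epochs = config
    return (math.log10(lr), math.log2(batch_size), math.log2(epochs))

def surrogate_accuracy(config, data=grid_results, power=2.0):
    """Estimate accuracy at `config` as a distance-weighted average of known points."""
    x = featurize(config)
    weighted_sum, weight_total = 0.0, 0.0
    for known_config, accuracy in data:
        dist = math.dist(x, featurize(known_config))
        if dist == 0.0:
            return accuracy  # exact grid point: return the recorded value
        weight = 1.0 / dist ** power
        weighted_sum += weight * accuracy
        weight_total += weight
    return weighted_sum / weight_total

# Query a configuration that was never trained.
print(surrogate_accuracy((0.003, 48, 8)))
```

Whatever model plays the surrogate role, the point is the same: once it is fitted, scoring a new configuration costs one function call instead of one training run.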

Our forward policy network was trained on 10,000 sample trajectories with a learning rate of

Results and Analysis

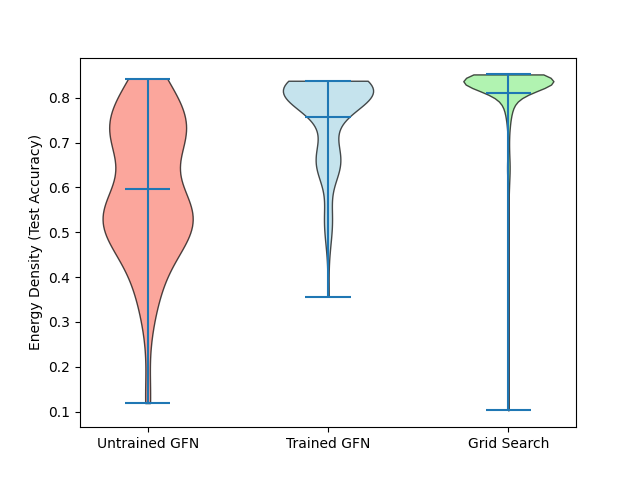

To evaluate our method, we sampled 1,000 trajectories from both trained and untrained policies, then compared their distributions with our grid search results.

The results are encouraging: our trained GFN samples high-performing hyperparameter configurations much more frequently than the untrained version. This indicates that the network is learning to match the flow induced by our reward (energy) function as intended.
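A comparison of this kind can be mimicked with two stand-in samplers. In the sketch below, a uniform sampler plays the untrained policy and a reward-proportional sampler plays an ideally trained GFN; the accuracies, the 0.85 threshold, and the exponent used to sharpen the reward are all assumptions made for illustration.

```python
import random

# Hypothetical surrogate accuracies for five configurations (made-up numbers).
accuracies = {"A": 0.60, "B": 0.75, "C": 0.85, "D": 0.88, "E": 0.90}

def sample_configs(weights, n, rng):
    """Draw n configuration names with probability proportional to `weights`."""
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)

def high_performer_rate(samples, threshold=0.85):
    """Fraction of sampled configurations whose accuracy meets the threshold."""
    return sum(accuracies[s] >= threshold for s in samples) / len(samples)

rng = random.Random(0)  # fixed seed so the comparison is repeatable

# Stand-in for an untrained policy: every configuration equally likely.
untrained = sample_configs({k: 1.0 for k in accuracies}, 1000, rng)

# Stand-in for a well-trained GFN: probability proportional to a sharpened reward.
rewards = {k: acc ** 20 for k, acc in accuracies.items()}
trained = sample_configs(rewards, 1000, rng)

print("untrained:", high_performer_rate(untrained))
print("trained:  ", high_performer_rate(trained))
```

In expectation the uniform sampler hits the top three configurations 60% of the time, while the reward-proportional sampler does so almost always, which is the qualitative gap we look for between untrained and trained policies.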

You might notice that both GFN distributions have slightly lower modes than the grid search. This is expected due to the lossy nature of our surrogate model and policy network. Perfect alignment would only occur with a perfect surrogate, but the smoothing effect actually has benefits—it helps explore the continuous hyperparameter space rather than just the discrete points from our initial grid search.

Conclusions and Future Directions

This final project demonstrates that Generative Flow Networks offer a promising approach to hyperparameter optimization. By sampling diverse, high-performing configurations, they provide machine learning practitioners with multiple viable options—each potentially offering different trade-offs in terms of accuracy, training time, and inference speed.

The approach has several advantages:

- It scales more efficiently than grid search as the number of hyperparameters increases

- It avoids the local minima problems of gradient-based methods

- It provides a diverse set of high-performing configurations rather than a single "best" answer

In our current implementation, we focused on three hyperparameters for a simple feed-forward network, but the framework is general enough to handle more complex architectures and larger hyperparameter spaces.

For those interested in exploring this approach further, we've made our code publicly available on GitHub.