By Beck LaBash and Oliver Marker

Final project for CS4100: Artificial Intelligence

Running is one of the most accessible physical activities available - all you need is a pair of shoes and some open space. But despite its simplicity, perfecting your running form can be surprisingly complex. Even subtle inefficiencies in form can lead to reduced performance and, worse, injury over time.

The current gold standard for analyzing running form involves expensive multi-camera systems, motion capture equipment, and hours of expert analysis - placing professional-grade form assessment far beyond the reach of most runners. Our research aimed to democratize this process by creating a system that needs only what most people already carry in their pockets: a smartphone camera.

Learning Running Form from Videos

The core idea of our work is simple yet powerful: we train a machine learning model to understand the nuances of running form from monocular video sources. To accomplish this, we needed to:

- Create a diverse dataset of running videos

- Extract 2D pose information from these videos

- Fine-tune a pre-trained human motion model on this running-specific data

- Develop simple classification methods to identify form deficiencies

The result is a system that can identify a common running form issue, overstriding, from only 18 labeled examples, reaching 72.5% average test accuracy under 4-fold cross-validation.

Building a Running Dataset

One of our first challenges was the absence of a comprehensive, publicly available dataset of running videos. We solved this by manually curating a collection of 756 unlabeled running videos from YouTube and Instagram. These clips captured a diverse range of runners spanning different genders, skill levels, speeds, recording qualities, and distances to the subject.

From this larger dataset, we created two smaller, labeled datasets:

- 18 videos of professional runners

- 18 videos labeled as either "overstriding" or "optimal" running form

These labeled sets would serve as the foundation for our downstream classification tasks.

From 2D to 3D: Extracting and Processing Pose Data

Our pipeline begins with RTMPose-M, a CNN-based model that extracts 2D poses from video frames. We process the extracted keypoints to match the format required by our backbone model, MotionBERT.
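To make the keypoint processing step concrete, here is a minimal sketch of one way it could look. It assumes that the 2D detector emits the 17 COCO keypoints and that the backbone expects a Human3.6M-style 17-joint skeleton with screen-normalized coordinates; the function names `coco_to_h36m` and `normalize_to_unit` are hypothetical, and the exact processing in our code may differ.

```python
import numpy as np


def coco_to_h36m(coco):
    """Map COCO-17 keypoints to an H36M-style 17-joint layout (assumed mapping).

    coco: array of shape (T, 17, C), with C = 2 (x, y) or 3 (x, y, confidence).
    Joints that COCO does not provide (pelvis, spine, neck, head) are
    approximated as midpoints of neighbouring joints.
    """
    c = np.asarray(coco, dtype=float)
    h = np.zeros_like(c)
    h[:, 0] = (c[:, 11] + c[:, 12]) / 2   # pelvis: midpoint of the hips
    h[:, 1] = c[:, 12]                    # right hip
    h[:, 2] = c[:, 14]                    # right knee
    h[:, 3] = c[:, 16]                    # right ankle
    h[:, 4] = c[:, 11]                    # left hip
    h[:, 5] = c[:, 13]                    # left knee
    h[:, 6] = c[:, 15]                    # left ankle
    h[:, 8] = (c[:, 5] + c[:, 6]) / 2     # neck: midpoint of the shoulders
    h[:, 7] = (h[:, 0] + h[:, 8]) / 2     # spine: midpoint of pelvis and neck
    h[:, 9] = c[:, 0]                     # nose
    h[:, 10] = (c[:, 1] + c[:, 2]) / 2    # head: midpoint of the eyes
    h[:, 11] = c[:, 5]                    # left shoulder
    h[:, 12] = c[:, 7]                    # left elbow
    h[:, 13] = c[:, 9]                    # left wrist
    h[:, 14] = c[:, 6]                    # right shoulder
    h[:, 15] = c[:, 8]                    # right elbow
    h[:, 16] = c[:, 10]                   # right wrist
    return h


def normalize_to_unit(xy, width, height):
    """Scale pixel coordinates so x spans [-1, 1], keeping the aspect ratio."""
    xy = np.asarray(xy, dtype=float)
    return xy / width * 2.0 - np.array([1.0, height / width])
```

With this convention, the centre pixel of a frame maps to the origin, which keeps inputs comparable across videos of different resolutions.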

MotionBERT is a dual-stream transformer architecture initially trained on the task of lifting 2D poses to 3D pose estimates. Its dual-stream attention mechanism allows it to attend to both:

- A single keypoint across all timesteps

- All keypoints within each timestep

This architecture is particularly well-suited for understanding human movement patterns over time.
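The two attention axes described above can be illustrated with a small NumPy sketch. This is a deliberately simplified toy, not MotionBERT's implementation: it uses a single head with no learned projections, and the function names are hypothetical. It only shows which tokens can exchange information in each stream.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(tokens):
    """Single-head self-attention with identity projections.

    tokens: array of shape (..., N, C); attention mixes the N tokens.
    """
    scale = 1.0 / np.sqrt(tokens.shape[-1])
    scores = tokens @ np.swapaxes(tokens, -1, -2) * scale  # (..., N, N)
    return softmax(scores, axis=-1) @ tokens               # (..., N, C)


def spatial_attention(x):
    """Attend over all joints within each frame. x: (T, J, C)."""
    return self_attention(x)


def temporal_attention(x):
    """Attend over all frames for each joint separately. x: (T, J, C)."""
    per_joint = np.swapaxes(x, 0, 1)                       # (J, T, C)
    return np.swapaxes(self_attention(per_joint), 0, 1)    # back to (T, J, C)
```

In the spatial stream, the output for a frame depends only on the joints of that frame; in the temporal stream, the output for a joint depends only on that joint's trajectory over time.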

Fine-tuning for Running-Specific Representations

We fine-tuned a lighter version of MotionBERT (about 16M parameters) on our curated running dataset. The model learns by predicting 3D poses, even though we only have 2D data and no 3D ground truth. Following previous work, we therefore compute the loss by reprojecting the predicted 3D poses back to 2D and comparing them with the extracted 2D keypoints.
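For reference, a reprojection loss of this kind can be written as follows. This is a sketch that assumes the form of the 2D reprojection loss described in the MotionBERT paper; the symbols are illustrative and may not match the notation of our original write-up.

```latex
\mathcal{L}_{2D} = \sum_{t=1}^{T} \sum_{j=1}^{J} \delta_{t,j} \left\lVert \hat{\mathbf{x}}_{t,j} - \mathbf{x}_{t,j} \right\rVert_2
```

In this notation, $T$ is the number of frames and $J$ the number of joints, $\hat{\mathbf{x}}_{t,j}$ is the 2D projection of the predicted 3D position of joint $j$ at frame $t$, $\mathbf{x}_{t,j}$ is the corresponding 2D keypoint extracted from the video, and $\delta_{t,j}$ is a weight reflecting whether that keypoint is visible (for example, its detection confidence).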

After fine-tuning, our model produces a rich embedding space that captures the subtle patterns of human running form.

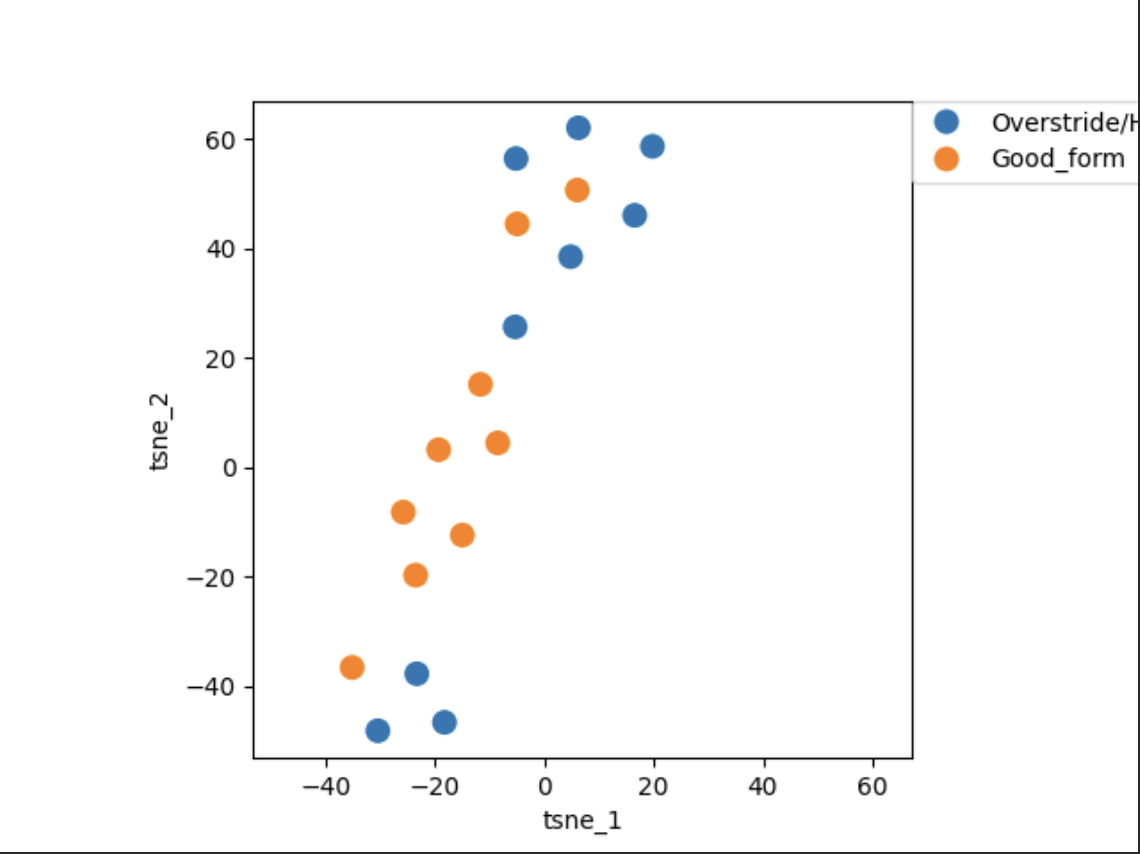

Detecting Overstriding with Few Examples

Overstriding is a common running deficiency where a runner's foot lands too far in front of their center of mass, creating excessive impact forces and often leading to injury. To detect this issue, we created a simple SVM classifier trained on our labeled dataset of just 18 examples (9 overstriding, 9 optimal form).

Despite the small sample size, our classifier performed well above the 50% chance level for this balanced two-class task:

- 89.1% average accuracy on training data across 4-fold cross-validation

- 72.5% average accuracy on test data

This performance suggests that our learned running form representations are useful: they capture meaningful differences that allow even simple classifiers to perform well with minimal examples.
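The evaluation protocol can be sketched with scikit-learn as follows. This is a minimal illustration under several assumptions: each video is already reduced to one fixed-length embedding vector, the SVM uses a linear kernel with feature standardization, and folds are stratified. The helper name `evaluate_svm`, the 64-dimensional embedding size, and the random placeholder data are all hypothetical, so the printed numbers are unrelated to the results reported above.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def evaluate_svm(embeddings, labels, n_splits=4, seed=0):
    """Return mean (train, test) accuracy of an SVM under stratified k-fold CV."""
    # Assumed setup: standardize features, then fit a linear-kernel SVM.
    clf = make_pipeline(StandardScaler(), SVC(kernel="linear", C=1.0))
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    scores = cross_validate(
        clf, embeddings, labels,
        cv=cv, scoring="accuracy", return_train_score=True,
    )
    return scores["train_score"].mean(), scores["test_score"].mean()


# Placeholder data standing in for per-video embeddings:
# 9 "optimal" (label 0) and 9 "overstriding" (label 1) examples.
rng = np.random.default_rng(0)
labels = np.array([0] * 9 + [1] * 9)
embeddings = rng.normal(size=(18, 64)) + 0.5 * labels[:, None]

train_acc, test_acc = evaluate_svm(embeddings, labels)
print(f"mean train accuracy: {train_acc:.3f}, mean test accuracy: {test_acc:.3f}")
```

Reporting both averages, as in the list above, makes the gap between training and held-out performance visible, which matters when there are only 18 examples.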

Limitations and Future Work

Our current system has several limitations worth noting:

- Our training data is skewed toward professional and experienced runners due to the nature of available online content. This affects the model's ability to differentiate between various running forms in the embedding space.

- Videos with multiple runners or occlusions can cause tracking issues that impact our results.

In future work, we aim to:

- Curate an even more diverse dataset of running styles

- Develop more robust multi-subject tracking methods

- Explore additional form deficiencies beyond overstriding

Conclusion

Our research demonstrates that it's possible to learn meaningful representations of human running form by fine-tuning motion encoders on curated running datasets. These representations enable classification of a common deficiency, overstriding, from only a handful of labeled examples and at a fraction of the cost of traditional methods.

By bringing professional-grade form analysis to anyone with a smartphone, we hope to help more runners improve their efficiency and reduce their risk of injury.

The code for fine-tuning and downstream experiments is available on GitHub.

This blog post is based on research conducted with Oliver Marker at Northeastern University.